Synthesizing Music from JSON

tl;dr: pl_synth is a tiny music synthesizer for C & JS and an editor (“tracker”) to create instruments and arrangements.

You can try it out at phoboslab.org/synth/

Sonant is a brilliant piece of software. It gives you 8 tracks, where each track has its own “instrument” (just a bunch of parameters) and a number of patterns. You fill the patterns with notes and lay out the sequence of patterns. Out comes the music.

Sonant was made for the demo scene and is therefore very concerned with size: both the code size of the synthesizer and the data size needed to define the music. Yet despite its tiny size, it still offers a lot of flexibility for the artist.

In 2011 Sonant was ported to JavaScript by Marcus Geelnard as Sonant Live and later forked as Sonant-X Live by Nicolas Vanhoren to support the newer WebAudio APIs in browsers. I have used Sonant-X in Underrun, Voidcall and Q1k3, each time condensing and refining it a bit more.

A few weeks ago I thought it would be nice to use it in C with high_impact too. Massaging the original C++ synthesizer was straightforward. The original tracker, however, only works on Windows and doesn't come with source code; likewise, the newer JS Sonant-X Live has aged quite a bit and isn't as good as it could be in modern browsers. So I set out to write a new tracker from scratch.

Arranging Instruments

There are many different ways to lay out instruments and notes to define a song. In the simplest case you'd have a single list where each element defines an instrument, a note and the exact time that it's being played at. E.g.:

```
[
    {"instrument": "piano", "note": "c-4", "time": 0.123},
    {"instrument": "piano", "note": "d-4", "time": 0.456},
    {"instrument": "piano", "note": "e-4", "time": 0.789},
    // …
]
```

This format would be quite tedious to edit and is not very space efficient. Music typically has a lot of repeating patterns, so a layer of abstraction that lays out patterns of notes, instead of individual notes, would be helpful.

In ZzFXM for example, each pattern has a number of tracks, where each track has a number of notes. A single overall list of patterns defines a song. This, in my opinion, is the wrong way around.

Instead, in Sonant (and thus pl_synth) a song consists of one or more tracks. Each track has a specific instrument and one or more patterns. Each pattern has exactly 32 rows, where each row may contain one note. A list of pattern indices for each track defines the overall sequence.
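To make this layout concrete, here is a minimal C sketch of how an absolute row number can be resolved to a note through a track's sequence and its patterns. The type and function names are hypothetical, not pl_synth's API. It assumes, as the example struct in this post suggests, that pattern indices in the sequence are 1-based with 0 meaning "no pattern", that a note value of 0 is an empty row, and that row_len is measured in samples.

```c
#include <stdint.h>

#define ROWS_PER_PATTERN 32

/* Hypothetical mirror of the layout: a pattern is 32 rows of notes */
typedef struct {
    uint8_t notes[ROWS_PER_PATTERN];   /* 0 = empty row */
} pattern_t;

typedef struct {
    int sequence_len;
    const uint8_t *sequence;           /* pattern index per slot, 0 = silence (assumed) */
    const pattern_t *patterns;         /* addressed 1-based from sequence (assumed) */
} track_t;

/* Note played by `track` at absolute row `row`, or 0 if none */
static uint8_t track_note_at(const track_t *track, int row) {
    if (row < 0) {
        return 0;
    }
    int slot = row / ROWS_PER_PATTERN;
    if (slot >= track->sequence_len) {
        return 0;                      /* past the end of the song */
    }
    uint8_t pattern_index = track->sequence[slot];
    if (pattern_index == 0) {
        return 0;                      /* no pattern in this slot */
    }
    return track->patterns[pattern_index - 1].notes[row % ROWS_PER_PATTERN];
}

/* Song length in samples, assuming row_len is given in samples per row */
static long song_len_samples(int sequence_len, int row_len) {
    return (long)sequence_len * ROWS_PER_PATTERN * row_len;
}
```

For the example song with 12 sequence slots and a row_len of 8481, this gives 3,256,704 samples, roughly 74 seconds at 44100 Hz, not counting release tails.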

This, in general, matches the layout of a lot of music better. E.g. it allows you to have a repeating drum-beat track that is independent of the melody. It's also very compact as a file format and works nicely as a C struct:

```c
pl_synth_song_t song = {
    .row_len = 8481,
    .num_tracks = 4,
    .tracks = (pl_synth_track_t[]){
        {
            .synth = {7,0,0,0,121,1,7,0,0,0,91,3,0,100,1212,5513,100,0,6,19,3,121,6,21,0,1,1,29},
            .sequence_len = 12,
            .sequence = (uint8_t[]){1,2,1,2,1,2,0,0,1,2,1,2},
            .patterns = (pl_synth_pattern_t[]){
                {.notes = {138,145,138,150,138,145,138,150,138,145,138,150,138,145,138,150,136,145,138,148,136,145,138,148,136,145,138,148,136,145,138,148}},
                {.notes = {135,145,138,147,135,145,138,147,135,145,138,147,135,145,138,147,135,143,138,146,135,143,138,146,135,143,138,146,135,143,138,146}}
            }
        },
        {
            .synth = {7,0,0,0,192,1,6,0,9,0,192,1,25,137,1111,16157,124,1,982,89,6,25,6,77,0,1,3,69},
            .sequence_len = 12,
            .sequence = (uint8_t[]){0,0,1,2,1,2,3,3,3,3,3,3},
            .patterns = (pl_synth_pattern_t[]){
                {.notes = {138,138,0,138,140,0,141,0,0,0,0,0,0,0,0,0,136,136,0,136,140,0,141}},
                {.notes = {135,135,0,135,140,0,141,0,0,0,0,0,0,0,0,0,135,135,0,135,140,0,141,0,140,140}},
                {.notes = {145,0,0,0,145,143,145,150,0,148,0,146,0,143,0,0,0,145,0,0,0,145,143,145,139,0,139,0,0,142,142}}
            }
        },
        {
            .synth = {7,0,0,1,255,0,7,0,0,1,255,0,0,100,0,3636,174,2,500,254,0,27},
            .sequence_len = 12,
            .sequence = (uint8_t[]){1,1,1,1,0,0,1,1,1,1,1,1},
            .patterns = (pl_synth_pattern_t[]){
                {.notes = {135,135,0,135,139,0,135,135,135,0,135,139,0,135,135,135,0,135,139,0,135,135,135,0,135,139,0,135,135,135,0,135}}
            }
        },
        {
            .synth = {8,0,0,1,200,0,7,0,0,0,211,3,210,50,200,6800,153,4,11025,254,6,32,5,61,0,1,4,60},
            .sequence_len = 12,
            .sequence = (uint8_t[]){1,1,1,1,0,0,1,1,1,1,1,1},
            .patterns = (pl_synth_pattern_t[]){
                {.notes = {0,0,0,0,140,0,0,0,0,0,0,0,140,0,0,0,0,0,0,0,140,0,0,0,0,0,0,0,140}}
            }
        }
    }
};
```

Creating Sounds from Thin Air

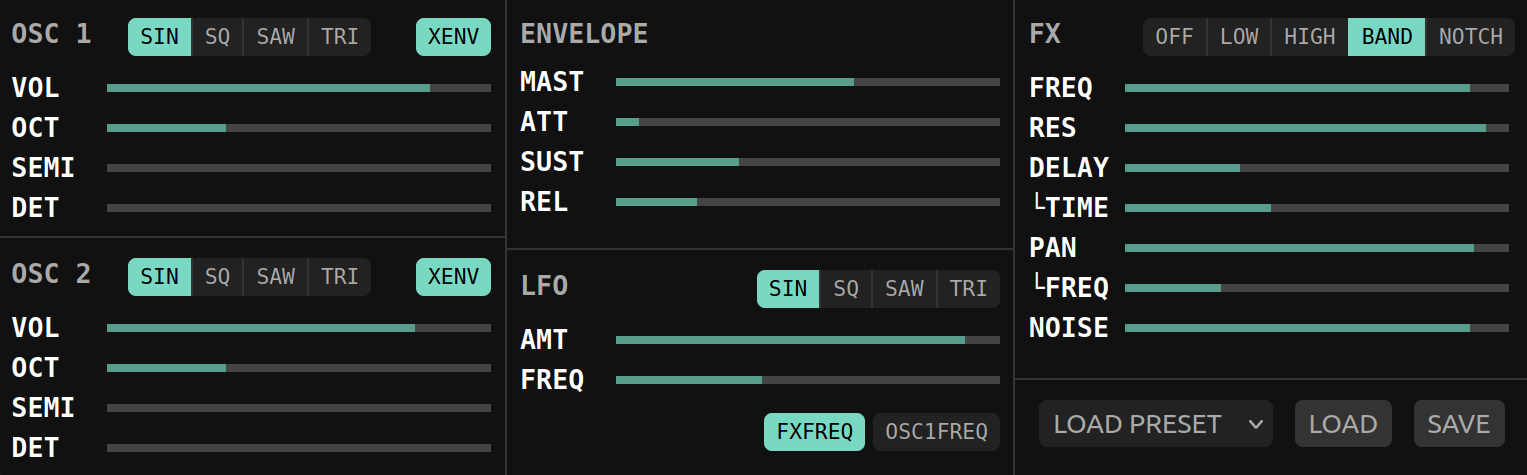

The heart of pl_synth is a loop that creates the raw PCM samples for a single note of an instrument. The instrument is defined by a bunch of parameters, most importantly the waveform (sine, square, sawtooth or triangle) and the frequency of two independent oscillators. Their volume is shaped by an envelope defined by three values: attack, sustain and release (how quickly the tone rises from 0% to 100% volume, how long it stays at 100%, and how quickly it falls back to 0%). All of this is adopted directly from the original Sonant C++ version.

A short sawtooth wave; the envelope is visualized by the grey area around it.

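The attack/sustain/release envelope described above can be sketched as a standalone function. It follows the envelope branch of the synth loop shown in this post, with one assumed addition: a clamp to 0 once the release has finished. The name envelope_at is hypothetical, and all times are in samples.

```c
/* Piecewise-linear envelope (times in samples):
   rises 0 -> 1 during `attack`, holds 1 during `sustain`,
   falls 1 -> 0 during `release`. Requires release > 0. */
static float envelope_at(int i, float attack, float sustain, float release) {
    if (i < attack) {
        return i / attack;                              /* attack ramp */
    }
    if (i < attack + sustain) {
        return 1.0f;                                    /* sustain plateau */
    }
    float e = 1.0f - (i - attack - sustain) / release;  /* release ramp */
    return e > 0.0f ? e : 0.0f;                         /* assumed clamp after the release */
}
```

The loop multiplies each sample by this value; with xenv enabled it additionally scales the oscillator frequency by its square, so the pitch follows the envelope as well.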

On its own, this is not terribly exciting. Pure sine waves sound like telephone dial tones; a square wave sounds just like what a Game Boy might produce (which of course is no coincidence). But if you put a delay effect on it, modulate the oscillator frequency with an LFO (low frequency oscillator) and apply a band pass filter, you can produce a surprising range of different sounds.
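The band pass filter is one output of the state variable filter inside the synth loop. Pulled out into its own function, a single filter step looks roughly like this. The struct and function names are hypothetical, and the naming of the fx_filter modes (1 high pass, 2 low pass, 3 band pass, 4 notch) is inferred from the order of the output array in the loop.

```c
/* State of a state variable filter, as kept across samples in the loop */
typedef struct {
    float low, band, high;
} svf_t;

/* One filter step. `f` is the cutoff coefficient, `res` the resonance factor,
   `mode` selects the output like fx_filter does in the loop:
   0 = unfiltered, 1 = high pass, 2 = low pass, 3 = band pass, 4 = notch (low + high). */
static float svf_process(svf_t *s, float in, float f, float res, int mode) {
    s->low += f * s->band;
    s->high = res * (in - s->band) - s->low;
    s->band += f * s->high;
    float outputs[5] = { in, s->high, s->low, s->band, s->low + s->high };
    return outputs[mode];
}
```

A quick sanity check for the mode mapping: fed a constant (DC) input, the low pass output settles at the input level while the high pass output settles at 0.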

The whole loop that does all this is so short that I can reproduce it here in full (modified a bit for clarity). PL_SYNTH_TAB(waveform, pos) returns the value for a particular waveform at a particular position. E.g. for a sine wave it's just sin(pos). Each iteration of this loop produces one sample.

```c
for (int i = num_samples; i >= 0; i--) {
    float filter_f = fx_freq;
    float envelope = 1;
    float sample = 0;

    // LFO
    float lfor = PL_SYNTH_TAB(lfo_waveform, i * lfo_freq) * lfo_amt + 0.5f;

    // Envelope
    if (i < env_attack) {
        envelope = i / env_attack;
    }
    else if (i >= env_attack + env_sustain) {
        envelope -= (i - env_attack - env_sustain) / env_release;
    }

    // Oscillator 0
    float cur_osc0_freq = osc0_freq;
    if (lfo_osc_freq) {
        cur_osc0_freq *= lfor;
    }
    if (osc0_xenv) {
        cur_osc0_freq *= envelope * envelope;
    }
    osc0_pos += cur_osc0_freq;
    sample += PL_SYNTH_TAB(osc0_waveform, osc0_pos) * osc0_vol;

    // Oscillator 1
    float cur_osc1_freq = osc1_freq;
    if (osc1_xenv) {
        cur_osc1_freq *= envelope * envelope;
    }
    osc1_pos += cur_osc1_freq;
    sample += PL_SYNTH_TAB(osc1_waveform, osc1_pos) * osc1_vol;

    // Noise oscillator
    if (noise_vol) {
        sample += rand_float() * noise_vol * envelope;
    }
    sample *= envelope * (1.0f / 255.0f);

    // State variable filter
    if (fx_filter) {
        if (lfo_fx_freq) {
            filter_f *= lfor;
        }
        filter_f = PL_SYNTH_TAB(0, filter_f * (0.5f / PL_SYNTH_SAMPLERATE)) * 1.5f;
        low += filter_f * band;
        high = fx_resonance * (sample - band) - low;
        band += filter_f * high;
        sample = (float[5]){sample, high, low, band, low + high}[fx_filter];
    }

    // Panning & master volume
    float pan = PL_SYNTH_TAB(0, i * fx_pan_freq) * pan_amt + 0.5f;
    sample *= 78 * env_master;

    // Write sample
    samples[i * 2 + 0] += sample * (1-pan);
    samples[i * 2 + 1] += sample * pan;
}
```

JS Performance

Of course producing each sample for each track, some with overlapping instruments, means a whole lot of iterations of this loop. Each operation in this loop has an impact on performance.

5000ms

Sonant-X Live, the JS port I first used in my game Underrun, was painfully slow. Generating all samples for a song typically took 5 seconds or more. Most of the time was spent in the oscillator functions. Turns out, calling Math.sin() ten million times for 60 seconds of music is not the best idea.

1000ms

For my next game, Voidcall, I got the time to generate music down to 1 second. How did I make Math.sin() so much faster? By not calling it at all, of course. Instead, I pre-generated a lookup table for each oscillator.

```js
// Generate the lookup table with 4 oscillators: sin, square, saw, tri
let len = 4096;
let tab = new Float32Array(len * 4);
for (let i = 0; i < len; i++) {
    tab[i          ] = Math.sin(i * Math.PI * 2 / len); // sin
    tab[i + len    ] = tab[i] < 0 ? -1 : 1; // square
    tab[i + len * 2] = i / len - 0.5; // saw
    tab[i + len * 3] = i < len/2 ? (i/(len/4)) - 1 : 3 - (i/(len/4)); // tri
}
```

All 4 oscillators are stored in one table. The lookup then adds an offset for the specific oscillator, e.g. offset 8192 (2 × 4096) for the sawtooth oscillator.

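```js
sample = tab[offset + ((pos * len) & 4095)];
```

The bitwise AND & 4095 is equivalent to modulo % 4096 for non-negative values, but much faster. Hence the power-of-two table length. As a bonus, the & operator in JS converts pos * len to a 32-bit integer, so the fractional part is dropped for free.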

4096 elements provide enough resolution even for very low frequency oscillators. E.g. one period of a barely audible 20 Hz tone at a 44100 Hz sample rate is just 2205 samples long, fewer than the 4096 entries in the table.

500ms

I squeezed out quite a bit more performance by restructuring some of the if statements, merging multiplications and just making sure that there's no division or function calls in the inner loop. E.g.:

```js
for (let j = num_samples; j >= 0; j--) {
    // …
    if (noise_fader) {
        sample += (2*Math.random()-1) * noise_fader * envelope;
    }
    sample *= envelope / 255.0;
}
```

became

```js
let uint8_norm = 1 / 255;
let rand_state = 0xd8f554a5;
let noise_vol = noise_fader * 4.6566e-010; /* 1/(2**31) */
for (let j = num_samples; j >= 0; j--) {
    // …
    if (noise_fader) {
        // xorshift-style PRNG instead of calling Math.random()
        rand_state ^= rand_state << 13;
        rand_state ^= rand_state >> 17;
        rand_state ^= rand_state << 5;
        sample += rand_state * noise_vol * envelope;
    }
    // multiply by a precomputed constant instead of dividing
    sample *= envelope * uint8_norm;
}
```

250ms

The way to speed up the JS version even further is to not use JS at all. I wrote a C version specifically tailored to be compiled as a WASM module. Most of the song generation, JSON reading etc. is still handled by JS, but the performance-critical part (the sound generation) is done in C.

The C source for the WASM module is just 200 lines of code and compiles to 2 KB of WASM. The resulting synth.wasm is embedded into the JS source as a base64 string. This of course inflates the size again (base64 adds a third), but when delivered via gzip it hardly matters.

The WASM version is about twice the size of the vanilla JS version, but also twice as fast.

Fun fact: this WASM module also contains a function to clear a Float32Array.

```c
void clear(float *samples, int len) {
    for (int i = 0; i < len; i++) {
        samples[i] = 0;
    }
}
```

In Firefox (as of v133) this is 10x faster than Float32Array.fill(0) in JS.

The WASM version compiles down to memory.fill, which is basically a memset. In contrast, Firefox/SpiderMonkey implements TypedArray.fill() as a “self-hosted builtin”, that is: a JavaScript function, not native code. This is somewhat surprising, considering that TypedArrays were introduced specifically for performance reasons.

For what it's worth, there's no performance difference between TypedArray.fill() and the WASM version in Chrome.
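Compilers typically recognize a zeroing loop like clear() as a memset, and memset is what lowers to memory.fill when targeting WASM with bulk memory enabled. In a native build the same operation can simply be written as a single call. A minimal sketch (clear_samples is a hypothetical name); it relies on 0.0f being the all-zero bit pattern in IEEE 754 floats.

```c
#include <stddef.h>
#include <string.h>

/* Zero `len` floats in one call; valid because 0.0f is all-zero bits in IEEE 754 */
static void clear_samples(float *samples, int len) {
    memset(samples, 0, (size_t)len * sizeof *samples);
}
```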

25ms

I experimented with a version that would do everything through WebAudio nodes instead of generating raw samples. This turned out to be a massive pain in the ass. Working with WebAudio is cumbersome and the code size exploded tenfold. This design-by-consortium node graph is just not my idea of fun.

Another approach would be generating samples on the fly using AudioWorklets. This is probably worth exploring in the future.

Native C Version

The native C version of pl_synth comes as a single-header library. I took some care to make it allocator-agnostic (i.e. all memory has to be provided by the caller), but other than that, there's not much to report.

Here's a short example of the C version that generates a song and stores it as a .wav file.
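The song generation itself goes through pl_synth's header, but the last step, writing the rendered samples to a .wav file, is independent of the library. A minimal sketch of that step only, assuming the song has already been rendered into interleaved 16-bit stereo samples at 44100 Hz; the function names write_wav and put_le are hypothetical.

```c
#include <stdint.h>
#include <stdio.h>

/* Write `v` as a little-endian integer of `bytes` bytes */
static void put_le(FILE *f, uint32_t v, int bytes) {
    for (int i = 0; i < bytes; i++) {
        fputc((int)((v >> (8 * i)) & 0xff), f);
    }
}

/* Write interleaved stereo 16-bit samples as a PCM WAV file.
   `num_frames` counts stereo frames (one left + one right sample).
   Returns 0 on success, -1 on a write error. */
static int write_wav(FILE *f, const int16_t *samples, uint32_t num_frames, uint32_t sample_rate) {
    const uint32_t channels = 2, bytes_per_sample = 2;
    uint32_t data_size = num_frames * channels * bytes_per_sample;

    fwrite("RIFF", 1, 4, f);
    put_le(f, 36 + data_size, 4);                 /* size of everything after this field */
    fwrite("WAVEfmt ", 1, 8, f);
    put_le(f, 16, 4);                             /* fmt chunk size for PCM */
    put_le(f, 1, 2);                              /* format tag: 1 = integer PCM */
    put_le(f, channels, 2);
    put_le(f, sample_rate, 4);
    put_le(f, sample_rate * channels * bytes_per_sample, 4); /* byte rate */
    put_le(f, channels * bytes_per_sample, 2);    /* block align */
    put_le(f, 8 * bytes_per_sample, 2);           /* bits per sample */
    fwrite("data", 1, 4, f);
    put_le(f, data_size, 4);

    for (uint32_t i = 0; i < num_frames * channels; i++) {
        put_le(f, (uint16_t)samples[i], 2);       /* two's complement, little-endian */
    }
    return ferror(f) ? -1 : 0;
}
```

The header is the canonical 44-byte PCM layout, so a file with n stereo frames is 44 + 4n bytes long.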

The Tracker

The Tracker, the editor where you compose your songs, is where I spent most of my time on this project. It's written in plain old JS and comes as a single HTML file. There's no build step, no bundler, no npm install and no server needed. You just launch it with a double-click from your local file system (or, of course, online at /synth).

All HTML is generated in JS through a simple h(type, props, ...children) function that returns a DOM element. children can either be undefined, a DOM element, an instance of a special HTMLComponent class or an array of those. HTMLComponent is simply a class that has a .element property for a DOM element. The whole UI is built with this simple setup.

E.g. the Track class – the UI element that holds all patterns and the instrument for a single track – looks like this:

```js
class Track extends HTMLComponent {
    app = null;
    instrument = null;
    index = 0;
    patterns = [];
    numDefaultPatterns = 4;

    constructor(app, index) {
        super();
        this.app = app;
        this.instrument = new Instrument(this);
        this.index = index;
        for (let i = 0; i < this.numDefaultPatterns; i++) {
            this.patterns.push(new Pattern(this, i));
        }

        this.element = h('div', {class: 'row track'},
            h('div', {class: 'head', text: 'Track ' + (index+1)},
                h('span', {},
                    h('button', {class: 'small', text: 'rem', title: 'remove pattern', onclick: ev => this.onRemovePattern()}),
                    h('button', {class: 'small', text: 'add', title: 'add pattern', onclick: ev => this.onAddPattern()})
                )
            ),
            h('div', {class: 'patterns'},
                this.patterns
            ),
            this.instrument
        );
    }

    // …
}
```

Now, you're probably wondering how I re-render elements when something changes. The answer, of course, is to use the DOM directly. Setting .textContent = 'foo' or calling .classList.add('zomg') is really no big deal. In fact, being so selective about these updates makes the whole thing feel quite snappy.

Undo / Redo

I spent a lot of time making the tracker feel right, adding a lot of keyboard shortcuts for playback, selections, copy, paste and, of course, undo & redo.

Implementing undo can be quite challenging, especially for more complex apps. But here, it simply stores the whole song for each step in the history. Each time a new entry in the history is created, the app is queried for the JSON string of the current song. On restore, the app simply loads the JSON string from history again.

Similar actions that occur within a short time span are grouped together as one entry in the history. This is done by:

- giving each action a description

- setting a timeout (500ms) before committing the action

- resetting the timeout if another action with the same description is added

This is all managed in the History class, instantiated with a stateCallback that returns the current song JSON and a restoreCallback that loads JSON data. The class looks like this:

```js
class History {
    levels = 100;
    timeout = 500;
    stack = [];
    pos = 0;
    stateCallback = null;
    restoreCallback = null;
    timeoutId = 0;
    currentAction = null;

    constructor(levels, timeout, stateCallback, restoreCallback) {
        this.levels = levels;
        this.timeout = timeout;
        this.stateCallback = stateCallback;
        this.restoreCallback = restoreCallback;
    }

    add(action) {
        // A different action commits the pending one right away
        if (this.currentAction !== action) {
            this.commit();
        }
        this.currentAction = action;

        // (Re)start the timer; commit once no further add() arrives in time
        clearTimeout(this.timeoutId);
        this.timeoutId = setTimeout(this.commit.bind(this), this.timeout);
    }

    commit() {
        if (!this.currentAction) {
            return;
        }

        // Discard states that were undone; they can no longer be redone
        if (this.pos) {
            this.stack.splice(this.stack.length - this.pos, this.pos);
            this.pos = 0;
        }

        // Only now is the (potentially expensive) song state queried
        this.stack.push({data: this.stateCallback(), action: this.currentAction});

        // Drop the oldest entry once the history is full
        if (this.stack.length > this.levels) {
            this.stack.shift();
        }
        this.currentAction = null;
    }

    undo() {
        let prev = this.stack[this.stack.length - this.pos - 1];
        let action = this.stack[this.stack.length - this.pos - 2];
        if (!action) {
            return null;
        }
        this.pos++;
        this.restoreCallback(action.data);
        return prev.action;
    }

    // redo() and some other features omitted
}
```

The app then calls history.add() each time something is changed. For instance, if you set a note in a pattern: history.add('set note'). If no further 'set note' follows within 500ms, the action is committed to the history stack.

An important detail here is that you don't call history.add() with the state of the app. Instead, the History class specifically asks for the state (through the stateCallback()) only when it is truly needed. This ensures that we don't query the state of the app (potentially an expensive operation) more than necessary.

As an example, when you click and drag the volume slider for an instrument, each time you move the slider even just 1px the app calls history.add(). But this is not a problem: as long as you keep moving within 500ms nothing is committed yet; no state is queried.
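The grouping rule itself does not depend on timers. As a hypothetical C sketch, the same logic can be driven by explicit timestamps: history_tick() is called regularly (e.g. once per frame) in place of setTimeout, and the snapshot of the song state is reduced to a counter. All names here are illustrative, not part of the tracker.

```c
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *pending;  /* description of the uncommitted action, or NULL */
    long last_ms;         /* time of the most recent history_add() */
    long timeout_ms;      /* grouping window, e.g. 500 */
    int committed;        /* stands in for the stack of song snapshots */
} history_t;

static void history_commit(history_t *h) {
    if (!h->pending) {
        return;
    }
    /* A real implementation would query the song state here, and only here */
    h->committed++;
    h->pending = NULL;
}

/* Called regularly (e.g. once per frame) in place of setTimeout */
static void history_tick(history_t *h, long now_ms) {
    if (h->pending && now_ms - h->last_ms >= h->timeout_ms) {
        history_commit(h);
    }
}

static void history_add(history_t *h, const char *action, long now_ms) {
    history_tick(h, now_ms);           /* commit a pending action whose window expired */
    if (h->pending && strcmp(h->pending, action) != 0) {
        history_commit(h);             /* a different action ends the current group */
    }
    h->pending = action;
    h->last_ms = now_ms;               /* same action: the window restarts */
}
```

Dragging a slider then produces a burst of history_add() calls with the same description; none of them commits until the drag pauses for longer than the timeout or a different action comes in.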

Songs as URLs

The tracker can load songs in the “legacy” JSON format used by Sonant Live and Sonant-X Live, e.g.:

```
{
    "rowLen": 5513,
    "songData": [
        {
            "osc1_oct": 7, "osc1_det": 0, "osc1_detune": 0, "osc1_xenv": 0, "osc1_vol": 192, "osc1_waveform": 3,
            "osc2_oct": 7, "osc2_det": 0, "osc2_detune": 7, "osc2_xenv": 0, "osc2_vol": 201, "osc2_waveform": 3,
            "noise_fader": 0, "env_attack": 789, "env_sustain": 1234, "env_release": 13636, "env_master": 191,
            "fx_filter": 2, "fx_freq": 5839, "fx_resonance": 254, "fx_delay_time": 6, "fx_delay_amt": 121,
            "fx_pan_freq": 6, "fx_pan_amt": 147, "lfo_osc1_freq": 0, "lfo_fx_freq": 1, "lfo_freq": 6,
            "lfo_amt": 195, "lfo_waveform": 0,
            "p": [1,2,0,0,1,2,1,2],
            "c": [
                {
                    "n": [154,0,154,0,152,0,147,0,0,0,0,0,0,0,0,0,154,0,154,0,152,0,157,0,0,0,156,0,0,0,0,0]
                },
                {
                    "n": [154,0,154,0,152,0,147,0,0,0,0,0,0,0,0,0,154,0,154,0,152,0,157,0,0,0,159,0,0,0,0,0]
                }
            ]
        },
        {
            "osc1_oct": 7, "osc1_det": 0,
            …
        },
        …
    ]
}
```

It can also load songs in a new compact array representation, or from a native C-struct source. The new array format is mostly equivalent to the legacy JSON format, just without property names and optionally with implicit 0 values. The latter makes it invalid as JSON, but still valid JS:

```js
const song = [5513, [
    [
        [7,,,,192,3,7,,7,,21,3,,789,1234,13636,191,2,5839,254,6,121,6,147,,1,6,195],
        [1,2,,,1,2,1,2],
        [
            [154,,154,,152,,147,,,,,,,,,,154,,154,,152,,157,,,,156],
            [154,,154,,152,,147,,,,,,,,,,154,,154,,152,,157,,,,159]
        ]
    ],
    [
        …
    ]
]];
```

Compressing the JS array format with CompressionStream and base64-encoding the result typically yields a string that is 300 to 1200 characters long (depending on the length and complexity of the song itself), short enough to fit into a URL.

So here's the soundtrack for Q1k3, all stored in the URL alone:

I have no doubt that this could be compressed further by using a binary format instead of JSON. Of course this would come at the expense of more code needed for the compression/decompression. For a JS13k entry your best option is probably the JS Array format, as your whole submission will be zipped up anyway.
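The implicit-zero notation of the array format is easy to produce. A hypothetical C helper that serializes one 32-row pattern, writing zeros as empty slots and dropping trailing zeros (as the example pattern rows above do), might look like this sketch; the function and macro names are illustrative.

```c
#include <stddef.h>
#include <stdio.h>

#define PATTERN_ROWS 32
/* Worst case: "[" + 32 three-digit notes + 31 commas + "]" + terminator */
#define COMPACT_MAX (1 + PATTERN_ROWS * 3 + (PATTERN_ROWS - 1) + 1 + 1)

/* Serialize one pattern in the compact array notation: zeros become empty
   slots, trailing zeros are dropped. Returns the string length, or 0 if
   `cap` is smaller than COMPACT_MAX. */
static size_t pattern_to_compact(const unsigned char notes[PATTERN_ROWS], char *out, size_t cap) {
    if (cap < COMPACT_MAX) {
        return 0;
    }
    int last = PATTERN_ROWS - 1;
    while (last >= 0 && notes[last] == 0) {
        last--;                               /* find the last non-empty row */
    }
    size_t n = 0;
    out[n++] = '[';
    for (int i = 0; i <= last; i++) {
        if (i > 0) {
            out[n++] = ',';
        }
        if (notes[i]) {                       /* empty rows leave an empty slot */
            n += (size_t)snprintf(out + n, cap - n, "%u", (unsigned)notes[i]);
        }
    }
    out[n++] = ']';
    out[n] = '\0';
    return n;
}
```

For the notes 154, 0, 154, 0, 152 followed by zeros, this produces [154,,154,,152].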

pl_synth in high_impact

As stated in the beginning of this post, the initial reason for all of this was to provide an alternative for sound and music in high_impact. pl_synth is now bundled with high_impact and one of the demo games (Drop) uses it exclusively for sound and music.

Where the game previously loaded a sound file:

```c
sound_source_t *coin = sound_source("assets/coin.qoa");
```

it now generates a sound effect on startup:

```c
sound_source_t *coin = sound_source_synth_sound(&(pl_synth_sound_t){
    .synth = {10,0,0,1,189,1,12,0,9,1,172,2,0,2750,689,95,129,0,1086,219,1,117},
    .row_len = 5513,
    .note = 135
});
```

No external sound files needed anymore!

Check out pl_synth and high_impact on github. I hope to see this pop up in some future games!